DataOps: Empowering the Future of Enterprise Data Management

- Explores the role of DataOps in modern data management practices.

- Highlights the integration of automation and collaboration in data pipelines.

- Discusses how DataOps enhances data quality, governance, and agility.

Data management has become a challenge not only for organizations as a whole but also for the people who work with data: it has become a bottleneck for data engineers, architects, and analysts.

The nature of these challenges keeps shifting: from acquiring data to storing it, from ordinary storage to high-volume storage, from processing transactions to deriving insights, and, throughout, toward making the whole process fast and efficient.

Organizations are looking for new approaches to overcome these data hurdles. DataOps has enabled organizations to streamline their data ecosystems, most conveniently by bringing many data management functions together under one cohesive umbrella.

Gartner recognized DataOps as an Innovation Trigger for Data Management in 2018

To make data analysis more useful, companies are replacing older ways of handling data with a newer method called DataOps. DataOps is about working together as a team and using technology to automate tasks, which helps teams get better insights from data faster.

DataOps is a confluence of advanced data governance and analytics delivery practices that encompasses the entire data life cycle, from data retrieval and preparation to analysis and reporting.

Still confused?

To clarify what DataOps is, let’s debunk some popular myths.

First and foremost, DataOps isn’t a technology. It is better understood as a methodology that combines automation, continuous monitoring, and involvement from both technical and business teams.

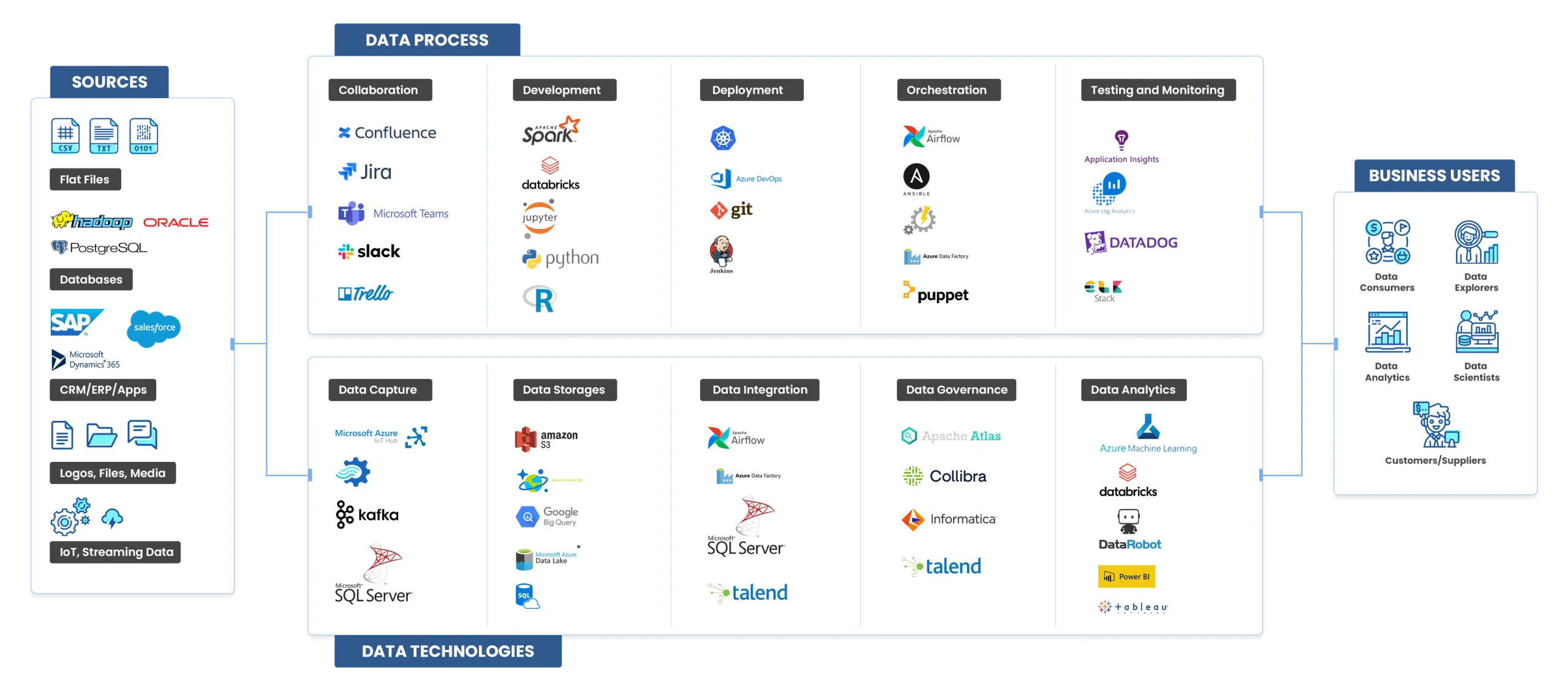

Certain technologies, such as collaboration tools and data automation tools, commonly support DataOps implementations (we’ll cover these later).

Another myth is that DataOps is limited to big data or data science applications. Data volume doesn’t determine whether DataOps applies to your current or upcoming project; at most, it changes which tools you use.

Lastly, many think DataOps is just DevOps for data. It’s more than that: it combines agile development and DevOps practices with a focus on continuous monitoring and maintenance. Think of it as working on a water pipeline, where the goal is to keep the water flowing while you finish the plumbing work.

Six Data Management Best Practices with DataOps

DataOps is a set of principles, practices, and technologies for delivering the data that accelerates analytics. Individually, many of these practices seem straightforward, but collectively they produce noticeable results in maximizing the value of data.

1. Audit Your Data Environment

Before launching your DataOps initiatives, audit your existing data environment and processes. The audit will help you answer the following questions.

Do you know your data? Do you trust your data? Are you able to detect errors quickly? Can you make changes incrementally without “breaking” your entire data pipeline? Organizations that have successfully deployed DataOps know which data assets they have access to and what quality those assets are, and they use their data to its maximum potential.

- Identify Gaps: The goal is to find the inefficiencies, errors, manual workarounds, and error-prone jobs that impede the free flow of data from source to destination. Also assess how efficiently code moves through the development life cycle, from development to test to production.

- Map Processes: Mapping all your data pipelines may be tedious, especially if they are messy and complex. However, the process map gives a clear picture that helps data teams identify waste and inefficiency in data operations, and it helps data executives invest their time effectively.
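Some of the audit questions above (do you trust your data, can you detect errors quickly) can be answered partly in code. The sketch below is a minimal, hypothetical example that assumes records arrive as Python dictionaries; `audit_records` and the field names are illustrative and not part of any specific DataOps tool. It counts missing required values and duplicate keys, two common symptoms of error-prone jobs.

```python
from collections import Counter
from typing import Any, Iterable


def audit_records(
    records: Iterable[dict[str, Any]],
    required_fields: list[str],
    key_field: str,
) -> dict[str, Any]:
    """Summarize basic quality issues in a batch of records.

    Hypothetical audit helper: a real audit would also cover freshness,
    lineage, and how code moves from development to production.
    """
    rows = list(records)

    # Count rows where each required field is absent or explicitly None.
    missing = {
        name: sum(1 for row in rows if row.get(name) is None)
        for name in required_fields
    }

    # Keys seen more than once often point to duplicate loads or upstream bugs.
    key_counts = Counter(row.get(key_field) for row in rows)
    duplicate_keys = [k for k, n in key_counts.items() if n > 1 and k is not None]

    return {"row_count": len(rows), "missing": missing, "duplicate_keys": duplicate_keys}


# Usage with a small, made-up batch of orders.
orders = [
    {"order_id": 1, "customer": "a", "amount": 10.0},
    {"order_id": 2, "customer": None, "amount": 5.5},
    {"order_id": 2, "customer": "b", "amount": None},
]
report = audit_records(orders, ["customer", "amount"], "order_id")
print(report)
# {'row_count': 3, 'missing': {'customer': 1, 'amount': 1}, 'duplicate_keys': [2]}
```

Running a check like this on every load, and tracking the counts over time, turns the audit from a one-off exercise into something the team can monitor continuously.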

2. Agile Development

Agile is one of the key inspirations behind the DataOps methodology. Agile is a way to manage a project by breaking it up into several phases. It’s always good to start small so you aren’t overwhelmed by the challenges that arise at each stage. Experts recommend starting with the single most significant barrier to delivering analytic output that the data environment audit identified.

Therefore, focus on the critical barrier in your data operations and develop a plan to break the gridlock. This may require re-engineering processes or adopting new technology to automate and optimize the steps. Evaluate the outcomes, and devise a plan for pinpointing, resolving, and monitoring the key barriers.

3. Automate Data-Related Processes

Today, automation is crucial to nearly every business process. Among the core issues data teams face are changes to source data formats and unavailable source data, both of which affect the applications that consume this data.

Handling source data changes with minimal disruption is not a cakewalk. Downtime caused by a single source change can disrupt multiple systems and affect various teams. DataOps helps enterprise data teams by using applications that automatically detect and adapt to source data changes, reducing downtime to a minimum and, where possible, to zero.
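Automatic detection of source changes can be sketched as a comparison between the schema a pipeline expects and what actually arrives. This is a minimal sketch under stated assumptions: the expected schema is a hand-written mapping of column names to Python types, and `detect_schema_drift` and `classify_drift` are hypothetical helpers, not the API of any particular DataOps product. It only detects and classifies drift; how a pipeline then adapts (extending a target table, pausing downstream loads, alerting the owning team) depends on your stack.

```python
from typing import Any


def detect_schema_drift(expected: dict[str, type], record: dict[str, Any]) -> dict[str, list[str]]:
    """Compare one incoming record with the expected schema (hypothetical helper)."""
    added = [col for col in record if col not in expected]
    removed = [col for col in expected if col not in record]
    # Compare types only for columns present on both sides; None values are skipped.
    type_changed = [
        col
        for col, expected_type in expected.items()
        if col in record
        and record[col] is not None
        and not isinstance(record[col], expected_type)
    ]
    return {"added": added, "removed": removed, "type_changed": type_changed}


def classify_drift(drift: dict[str, list[str]]) -> str:
    """Assumed policy: removed or retyped columns break consumers; new columns do not."""
    if drift["removed"] or drift["type_changed"]:
        return "breaking"
    if drift["added"]:
        return "additive"
    return "none"


# Usage: the source renamed nothing, but dropped one column, added one, and retyped "id".
expected = {"id": int, "email": str, "signup_date": str}
incoming = {"id": "42", "email": "x@example.com", "country": "DE"}

drift = detect_schema_drift(expected, incoming)
print(drift)
# {'added': ['country'], 'removed': ['signup_date'], 'type_changed': ['id']}
print(classify_drift(drift))
# breaking
```

Treating additive changes as safe and everything else as breaking is only one possible policy; teams whose downstream jobs select every column may want to treat new columns as breaking too.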

4. Balanced Data Governance

According to McKinsey, most data governance programs today are ineffective. Strict governance processes slow down development and data pipelines, and they restrict users from adopting new technologies. Users are left with a limited set of tools that have steep learning curves and limited features, which lowers productivity. These practices can also lead to security breaches when users adopt shadow IT practices (the use of IT systems, software, applications, and services without explicit IT department approval).

DataOps addresses governance by moving beyond traditional approaches. Instead of a one-size-fits-all approach to data management, it takes a flexible, distributed one that allows organizations to decentralize responsibility for data governance. Moreover, it provides the company with effective tooling for robust access controls, observability, alerting, and automation. It also lowers the skill level required to use data, enabling data teams to self-serve.
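Decentralized responsibility combined with shared tooling can be illustrated with a small access check: each domain team owns the rules for its own datasets, while a common function enforces them and raises an alert on denied requests. This is a simplified, hypothetical model; `DomainPolicy`, `can_read`, the domain names, and the roles are invented for illustration, and logging a warning stands in for a real alerting channel.

```python
import logging
from dataclasses import dataclass, field

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("governance")


@dataclass
class DomainPolicy:
    """Access rules owned and maintained by one business domain (hypothetical model)."""

    owner: str
    # Maps each dataset in the domain to the set of roles allowed to read it.
    allowed_roles: dict[str, set[str]] = field(default_factory=dict)


def can_read(policies: dict[str, DomainPolicy], domain: str, dataset: str, role: str) -> bool:
    """Shared enforcement point that defers to the owning domain's policy."""
    policy = policies.get(domain)
    allowed = policy is not None and role in policy.allowed_roles.get(dataset, set())
    if not allowed:
        # Stand-in for alerting; a real setup might notify the owning team.
        owner = policy.owner if policy is not None else "unknown"
        log.warning("denied %s read of %s.%s (owner: %s)", role, domain, dataset, owner)
    return allowed


policies = {
    "sales": DomainPolicy(owner="sales-data-team", allowed_roles={"orders": {"analyst", "finance"}}),
    "hr": DomainPolicy(owner="hr-data-team", allowed_roles={"salaries": {"hr_admin"}}),
}

print(can_read(policies, "sales", "orders", "analyst"))  # True
print(can_read(policies, "hr", "salaries", "analyst"))   # False, and a warning is logged
```

Because each domain edits only its own `DomainPolicy`, responsibility stays distributed, while the single `can_read` check keeps enforcement and alerting consistent across the organization.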

5. Aligning with Business Objectives

The primary goal of DataOps is to align with business objectives. This means serving customers well while continuously delivering value. There are many ways to align with the business. One is to implement specific processes, such as deploying scrum teams and holding a quarterly consolidation that brings together the organizations that follow the same business practices. This helps identify, consolidate, and prioritize cross-functional requirements for analytic solutions.

According to DataOps experts, data teams need to measure outcomes, not just output. Teams often put a lot of effort into measuring the cycle times for producing data sets or models while overlooking their commercial impact. Data teams should attend to technical processes while keeping the business outcomes in view.

6. Implement Collaborative Data Development Tools

DataOps tools enhance collaboration, maximize reusability, and automate processes. They equip data teams to scale, increase development capacity, accelerate cycle times, reduce errors, and improve data quality. DataOps borrows most of its tools from DevOps, and these tools are useful in both application and data development.

Here is a diagram showcasing the wide range of open source and premium tools and frameworks that DataOps supports.

Conclusion:

In this post, I’ve covered best practices, tools, and technologies for improving data management with DataOps. The advantages of DataOps go beyond data management tools to include cultural and organizational improvements. The goal is to reduce defects and improve data scalability and security, giving end users confidence in their data and analytical output.

What makes DataOps a buzzword is that its processes, and the data they deliver, can be used everywhere. That said, the right tools and technologies are an essential part of the DataOps equation. If you are looking for robust tools and services to help improve your DataOps, connect with us.

Saikiran Bellamkonda is Marketing Manager at Anblicks, responsible for overseeing GTM strategies, growth marketing, corporate marketing, strategic alliances, and marketing operations globally. He is deeply passionate about leveraging data-driven insights and exploring the transformative impact of AI on traditional business processes. Saikiran actively experiments with marketing technologies to drive added value and efficiencies. He holds an MBA degree with a focus in Marketing. Outside of work, Saikiran is an avid traveler and fitness enthusiast.