8 Ways to Improve Decision Making and Cut Costs with Better Data Quality

TL;DR

- Uncover accurate data through proper collection and validation.

- Discover the impact of clear data governance and accountability.

- Predictive analytics is driving personalized policies and enhancing customer experience.

Searching for the term “Data Quality” on a search engine returns six million pages, which shows how important data quality is and how crucial a role it plays in decision-making. However, data must first be understood before it can be classified and qualified for effective use in a given scenario.

Understanding the Quality of Data

Good-quality data is accurate, consistent, and scalable. It should also be helpful in decision-making, operations, and planning. Poor-quality data, on the other hand, can delay the deployment of a new system, damage reputation, lower productivity, lead to poor decisions, and cause loss of revenue. According to a report by The Data Warehousing Institute (TDWI), poor-quality customer data costs U.S. businesses approximately $611 billion per year. The research also found that 40% of firms have suffered losses due to poor data quality.

Organizations worldwide are heavily investing in data management and processing to achieve good quality data, but the real problem lies in defining what those qualities are. In any case, most cite several attributes that collectively characterize the quality of data.

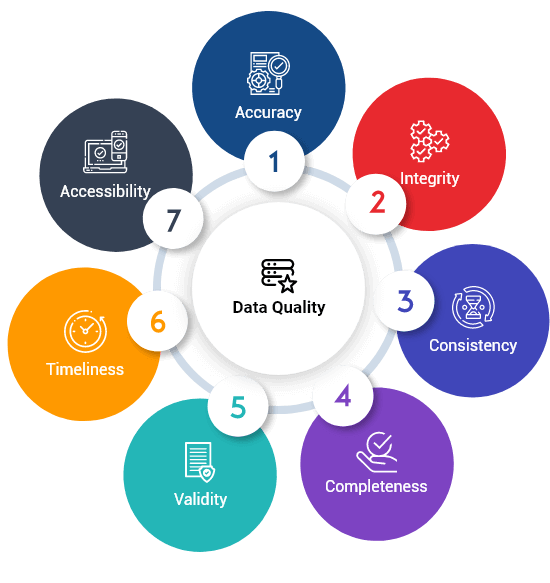

Seven characteristics that define data quality:

- Accuracy: Does the data accurately represent the real-world object?

- Integrity: Has the data remained intact and undamaged between updates?

- Consistency: Is the information stored consistently across systems?

- Completeness: How comprehensive is the data?

- Validity: Does the information match a specific format or range defined by the business?

- Timeliness: Is the information up to date? Can it be used for decision-making?

- Accessibility: Is the data easily accessible, understandable, and usable?
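
Some of these characteristics can be measured directly. The following is a minimal sketch, not a prescribed method, of how completeness, validity, and timeliness might be scored. The customer records, field names, email pattern, age range, and one-year freshness window are all illustrative assumptions rather than rules from any specific business.

```python
import re
from datetime import date, timedelta

# Hypothetical customer records; the field names are illustrative only.
RECORDS = [
    {"id": 1, "email": "ana@example.com", "age": 34, "updated": date(2024, 5, 1)},
    {"id": 2, "email": "not-an-email", "age": 29, "updated": date(2024, 4, 20)},
    {"id": 3, "email": None, "age": 212, "updated": date(2021, 1, 15)},
]

# Deliberately simple pattern; real email validation rules are an assumption here.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(records, field):
    """Share of records in which `field` is present and not None."""
    filled = sum(1 for r in records if r.get(field) is not None)
    return filled / len(records)


def validity(records, field, is_valid):
    """Share of non-missing values of `field` that pass the `is_valid` rule."""
    values = [r[field] for r in records if r.get(field) is not None]
    if not values:
        return 0.0
    return sum(1 for v in values if is_valid(v)) / len(values)


def timeliness(records, field, as_of, max_age_days):
    """Share of records updated within `max_age_days` of the `as_of` date."""
    cutoff = as_of - timedelta(days=max_age_days)
    return sum(1 for r in records if r[field] >= cutoff) / len(records)


report = {
    "email_completeness": completeness(RECORDS, "email"),
    "email_validity": validity(RECORDS, "email", lambda v: bool(EMAIL_PATTERN.match(v))),
    "age_validity": validity(RECORDS, "age", lambda v: 0 <= v <= 120),
    "timeliness": timeliness(RECORDS, "updated", date(2024, 6, 1), 365),
}

for metric, score in report.items():
    print(f"{metric}: {score:.0%}")
```

Each score is a share between 0 and 1, so an organization could track the same metric over time or compare it against whatever target the business owners of the data agree on.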

Several elements determine data quality, and each organization prioritizes them based on its needs. Priorities can vary from industry to industry and with an organization's stage of growth or even its current business cycle. The key, however, is to define the crucial elements when evaluating the data. Because these characteristics define the quality and accuracy of data, agreeing on them better positions organizations to use data effectively and achieve their business goals.

The following are eight ways to ensure better data quality:

1. Recognize the importance of data quality

The primary purpose of data is to fuel business. Rather than leaving data quality to the IT department, organizations must equip the primary users of the data to define the parameters of quality data. If business intelligence is closely linked with the underlying data, companies have a better chance of adopting effective methodologies that help them prioritize critical data.

2. Avoid singular thinking

Accuracy will not be the same for all types of data. There is no one-size-fits-all policy for data quality. Data comes from different sources, so not all forms of data share the same quality or the same metrics. For instance, 80% accuracy is sufficient for sentiment analysis on social media data, whereas it is not sufficient for industries like banking, financial services, and insurance (BFSI). The data must therefore be fine-tuned before analysis.
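
One way to make this explicit is to record a separate accuracy requirement for each kind of data. The sketch below is illustrative only: the 80% figure for social media sentiment comes from the example above, while the 99% figure for BFSI, the domain names, and the `meets_threshold` helper are assumptions made for the sake of the example.

```python
# Minimum acceptable accuracy per data domain.
# 0.80 for social media sentiment is taken from the text above;
# 0.99 for BFSI is an assumed, illustrative value.
REQUIRED_ACCURACY = {
    "social_sentiment": 0.80,
    "bfsi_transactions": 0.99,
}


def meets_threshold(domain, correct, total):
    """Return True if the observed accuracy satisfies the domain's requirement."""
    if total == 0:
        return False  # nothing was checked, so quality cannot be confirmed
    return correct / total >= REQUIRED_ACCURACY[domain]


# The same 85% accuracy passes for one domain and fails for the other.
print(meets_threshold("social_sentiment", correct=85, total=100))
print(meets_threshold("bfsi_transactions", correct=85, total=100))
```

Keeping the thresholds in one place makes the differing expectations visible to both business users and the teams that maintain the data.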

3. Focus on each stage of the data journey

Every organization wants to become data-driven by taking a holistic approach to an enterprise-level data strategy. Organizations also want to optimize their technology investments and cut their costs. To do so, a company should treat data as an asset from which to derive valuable insights.

4. Avoid unnecessary data

Organizations capture and use data every day across a variety of operations. The more data they have, the larger the margin for error will be. Organizations need to accept the reality that data isn’t always perfect. Understanding this will enable businesses to spot difficulties, build on their successes, and locate problems quickly, even before they happen.

5. Take responsibility

Data varies across organizations depending on their size, business model, fiscal health, and data strategy. Everyone in the organization shares responsibility for data quality. It’s a business problem, and the IT department alone cannot be held accountable. By taking control of data quality, companies can improve efficiency, cut costs, and improve decision-making.

6. Design data pipelines to avoid duplicate data

Duplicate data is a full or partial copy of data created from the same source. Human error causes most data duplication, which results in inaccurate reporting, lost productivity, and wasted marketing budget. To avoid duplication, a clear, logical data pipeline needs to be created at the enterprise level and shared across the organization.
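
As a minimal sketch of one deduplication step such a pipeline might include, the example below normalizes a key before comparing records, so that entries differing only in capitalization or stray spaces are treated as the same. Using the email address as the identifying key, along with the sample records and function names, is an assumption for illustration; real pipelines typically need matching rules suited to their own data.

```python
def normalize_key(record):
    """Build a comparison key from the email (assumed to identify a customer)."""
    return record["email"].strip().lower()


def deduplicate(records):
    """Keep the first record seen for each normalized key, preserving order."""
    seen = set()
    unique = []
    for record in records:
        key = normalize_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical input: the second entry is a manually re-keyed copy of the first.
customers = [
    {"name": "Ana Silva", "email": "ana@example.com"},
    {"name": "Ana Silva", "email": " Ana@Example.com "},
    {"name": "Raj Patel", "email": "raj@example.com"},
]

unique_customers = deduplicate(customers)
print(len(customers), "records in,", len(unique_customers), "records out")
```

Keeping the first occurrence is a simple design choice; a pipeline could instead keep the most recently updated record or merge fields from all copies.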

7. Enforce a data governance strategy

The most effective way to improve data quality is to define the who, what, how, when, where, and why of the data. It is also essential to make sure everyone in the organization abides by these policies. The policies should be clearly documented and made accessible to employees so that they can be enforced. This will not only improve security and compliance but also help improve business performance.

8. Invest in internal training

This could be a transformative approach. Attaining good data quality requires expertise and experience, which is unrealistic to expect from an entry-level executive but can be built through formal training. For competitive advantage, it’s critical for organizations to train their teams to manage data correctly and recognize its inherent value, and to encourage teams and executives to learn the basic concepts, principles, and practices of quality management. This helps them understand the benefits of good-quality data and the costs incurred due to poor data quality.

Author's Pick

According to a study conducted by KPMG Global, 84% of CEOs are concerned about the quality of the data they rely on when making decisions. Because CEOs hold ultimate decision-making authority in the organization, it is crucial to ensure trust and transparency regarding the quality of data. This will enable organizations to save time, reduce costs, make informed decisions, and achieve accurate analytics to improve business performance.

Saikiran Bellamkonda is Marketing Manager at Anblicks, responsible for overseeing GTM strategies, growth marketing, corporate marketing, strategic alliances, and marketing operations globally. He is deeply passionate about leveraging data-driven insights and exploring the transformative impact of AI on traditional business processes. Saikiran actively experiments with marketing technologies to drive added value and efficiencies. He holds an MBA degree with a focus in Marketing. Outside of work, Saikiran is an avid traveler and fitness enthusiast.