Knowledge Graph RAG: The Missing Layer in Enterprise AI

TL;DR

- RAG struggles with consistency and deeper reasoning in complex enterprise environments.

- Knowledge Graph RAG fixes this by linking information through entities and relationships, giving AI clearer context.

- The result is more accurate, explainable answers, ideal for scenarios where precision and traceability matter.

The rise of Large Language Models (LLMs) has pushed Retrieval-Augmented Generation (RAG) into the mainstream. For many organizations, RAG became the fastest and safest way to bring generative AI into production without losing control of their data.

But as enterprises scale AI to mission-critical use cases in finance, healthcare, supply chain, and operations, traditional RAG starts to show limitations.

- Answers become inconsistent

- Multi-step reasoning fails

- Compliance demands prove difficult

- Business context gets lost

- Different systems produce conflicting outputs

This is where Knowledge Graph RAG (KG-RAG) comes in: it adds an explicit layer of entities and relationships to retrieval, which leads to far more reliable outcomes.

In this blog, we’ll break down what knowledge graphs are, how KG-RAG differs from traditional RAG, when organizations should consider using it, and what an implementation pipeline looks like.

What Is a Knowledge Graph?

A knowledge graph is a network of real-world entities and the relationships between them. It uses a graph-based data structure, with “nodes” representing entities (such as people, places, or objects) and “edges” representing the connections or relationships between them. This structure lets machines work with the context and meaning of data, which makes it useful for smart search results, recommendation systems, and AI applications.

Knowledge graphs help bring order to complex systems by explicitly capturing:

- Business entities (customers, products, services)

- Their relationships (owns, interacts, depends on)

- Context around each entity

Enterprises have used KGs for years for fraud detection, recommendation systems, compliance, and master data management. But with LLMs, KGs have become even more powerful, adding a structured reasoning layer that traditional text-retrieval methods cannot offer.
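As a rough illustration of nodes and edges, a knowledge graph can be sketched as a list of (subject, relationship, object) triples indexed by source node. The entity names and relationship labels below are invented for this example, and a production system would typically use a graph database rather than an in-memory dictionary.

```python
from collections import defaultdict

# Hypothetical triples: (subject, relationship, object)
TRIPLES = [
    ("Acme Corp", "OWNS", "Billing Service"),
    ("Billing Service", "DEPENDS_ON", "Customer DB"),
    ("Jane Doe", "INTERACTS_WITH", "Billing Service"),
]


def build_graph(triples):
    """Index outgoing edges by source node so neighbors are cheap to look up."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return graph


graph = build_graph(TRIPLES)
print(graph["Billing Service"])  # [('DEPENDS_ON', 'Customer DB')]
```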

What Is Traditional RAG (and Why It’s Not Enough)?

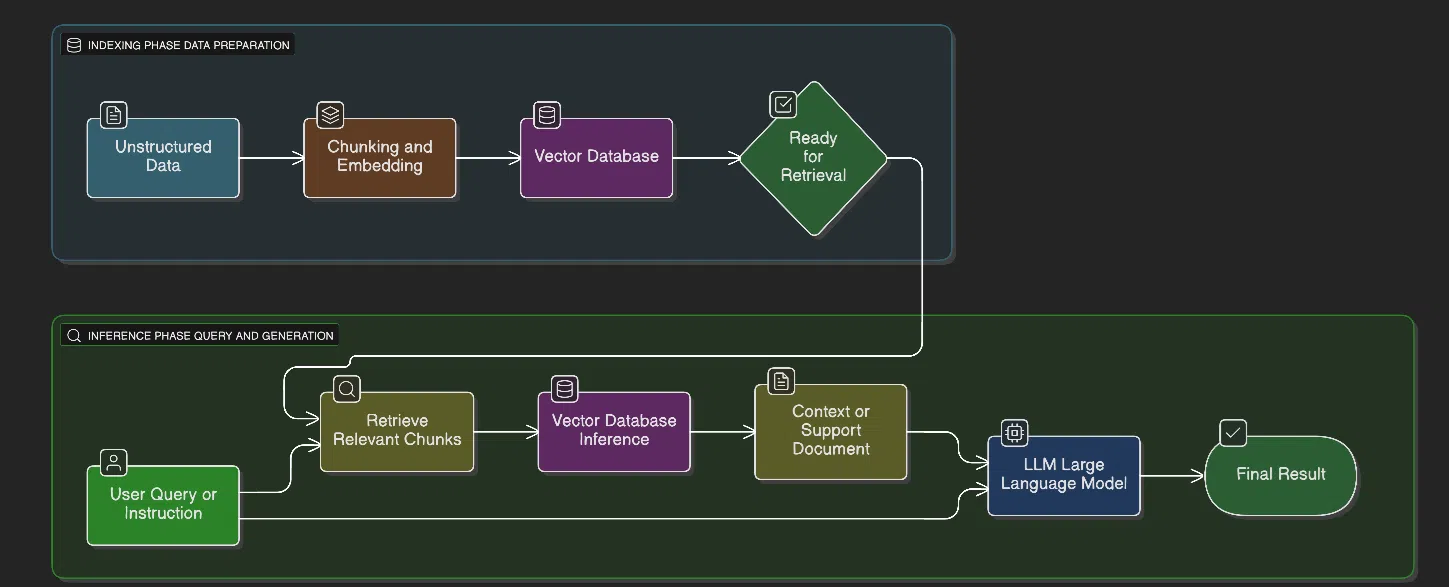

Traditional RAG follows a simple loop:

- Retrieve relevant documents

- Feed them into an LLM

- Generate an answer

RAG retrieves relevant text, but not relationships. Vector databases help, but they aren’t perfect.
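The retrieve, feed, generate loop can be sketched in a few lines. This is a toy version under stated assumptions: word overlap stands in for vector similarity, the documents are invented, and `build_prompt` only assembles the text that would be sent to an LLM rather than calling one.

```python
import re

# Hypothetical document chunks
DOCS = [
    "Service A depends on Service B for authentication.",
    "Service B stores its sessions in Database X.",
    "The cafeteria menu changes every Monday.",
]


def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, docs, k=2):
    """Rank documents by words shared with the query (stand-in for vector search)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, chunks):
    """Assemble the prompt that would be passed to an LLM."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


query = "What does Service A depend on?"
top = retrieve(query, DOCS)
prompt = build_prompt(query, top)
print(prompt)
```

Note that the retrieved chunks are just strings: nothing in this loop knows that Service A reaches Database X through Service B.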

Chunking is always a tradeoff:

- Large chunks → more context but more noise

- Small chunks → cleaner but missing details

- Too much metadata → inefficiency

No chunk size solves all problems.
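To make the tradeoff concrete, here is a minimal word-based chunker with optional overlap. The function name and parameters are illustrative assumptions; real pipelines usually chunk by tokens, sentences, or document structure.

```python
def chunk_words(text, size, overlap=0):
    """Split text into chunks of `size` words; consecutive chunks share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


text = "one two three four five six seven eight nine ten"
small = chunk_words(text, size=3)             # more, tighter chunks
large = chunk_words(text, size=6, overlap=2)  # fewer chunks, more context each
print(small)
print(large)
```

Smaller chunks isolate individual statements but can split a fact across two chunks; larger, overlapping chunks keep more context together at the cost of retrieving more unrelated text.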

Beyond chunking, traditional RAG has deeper limitations:

- No understanding of relationships: An LLM can read text stating that “Service A depends on Service B,” but it has no graph to traverse, so it cannot reliably follow that dependency any further.

- Fails on multi-hop reasoning: For example, “If Database X goes down, which business units are impacted?” Traditional RAG retrieves isolated chunks; it does not compute dependency chains.

- Inconsistent answers: Different documents may contradict each other; RAG does not unify facts.

- Lacks explainability: Retrieval shows chunks, but cannot show why the model reached a conclusion via logical paths.

- Poor fit for structured interconnected enterprise data

This gap is exactly what Knowledge Graph RAG is designed to fix.

How Knowledge Graph RAG Works (and why it's powerful)

KG-RAG enriches RAG by adding a structured knowledge layer.

Think of it this way:

Traditional RAG → retrieves text

Knowledge Graph RAG → retrieves facts + relationships + context

KG-RAG process:

- Convert unstructured text into entities and relationships

- Store them in a graph database (Neo4j, Neptune, TigerGraph)

- LLM uses hybrid retrieval:

  - Graph queries for accurate factual reasoning

  - Vector search for relevant context

- Combine both for a final answer
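The hybrid retrieval step can be sketched as two lookups whose results are merged into one context. Everything here is an assumption for illustration: the triples and passages are invented, entity matching is a plain substring check, and word overlap stands in for vector search.

```python
import re

# Hypothetical graph facts and text passages
TRIPLES = [
    ("Checkout API", "DEPENDS_ON", "Payments Service"),
    ("Payments Service", "DEPENDS_ON", "Ledger DB"),
]
PASSAGES = [
    "The Payments Service was migrated to the new cluster in March.",
    "Office parking passes are renewed yearly.",
]


def _words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def graph_facts(question, triples):
    """Return triples whose subject or object is named in the question."""
    q = question.lower()
    return [t for t in triples if t[0].lower() in q or t[2].lower() in q]


def text_context(question, passages, k=1):
    """Stand-in for vector search: rank passages by shared words."""
    q = _words(question)
    return sorted(passages, key=lambda p: len(q & _words(p)), reverse=True)[:k]


def hybrid_context(question):
    """Combine structured facts and unstructured passages for the LLM."""
    facts = [f"{s} {r} {o}" for s, r, o in graph_facts(question, TRIPLES)]
    return {"facts": facts, "passages": text_context(question, PASSAGES)}


ctx = hybrid_context("What does the Payments Service depend on?")
print(ctx)
```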

Enterprise Benefits:

- Multi-hop reasoning: Graphs naturally support questions like:

  - “Which suppliers indirectly impact Product Y?”

  - “Show all systems affected if API Z fails.”

  Traditional RAG cannot reliably handle this.

- Consistency and a unified enterprise model: A KG becomes a single source of truth across departments.

- Explainability: Every answer can show the relationship path the model used.

- Strong fit for regulated industries: Auditable, transparent, structured reasoning → suitable for finance, insurance, healthcare.

- Blends structured + unstructured knowledge: Most enterprises have both; KG-RAG allows them to work together.
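A question like “Show all systems affected if API Z fails” reduces to a graph traversal. The sketch below uses a breadth-first search over invented dependency edges and keeps the path to each affected system, which is also what makes the answer explainable. System names other than API Z are hypothetical.

```python
from collections import defaultdict, deque

# Hypothetical edges: (system, dependency) means "system DEPENDS_ON dependency"
DEPENDS_ON = [
    ("Checkout UI", "API Z"),
    ("Mobile App", "API Z"),
    ("Order Reports", "Checkout UI"),
    ("HR Portal", "Directory Service"),
]


def affected_by(failed, edges):
    """Map every direct or transitive dependent of `failed` to the path that reaches it."""
    dependents = defaultdict(list)
    for system, dependency in edges:
        dependents[dependency].append(system)

    paths = {failed: [failed]}
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for system in dependents[node]:
            if system not in paths:  # visit each system once
                paths[system] = paths[node] + [system]
                queue.append(system)
    del paths[failed]  # the failed system itself is not a dependent
    return paths


impact = affected_by("API Z", DEPENDS_ON)
for system, path in impact.items():
    print(system, "via", " -> ".join(path))
```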

When Should Organizations Use Knowledge Graph RAG?

| Scenario | Use RAG | Use KG-RAG |
| --- | --- | --- |
| Quick Q&A over unstructured docs | ✓ | |
| Structured enterprise data | | ✓ |
| Relationship-heavy questions | | ✓ |
| Multi-hop reasoning | | ✓ |
| Need explainability | | ✓ |
| Compliance / accuracy critical | | ✓ |
| Data updates very frequently | | |
| Simple chatbot for PDFs | ✓ | |

How to Implement a Knowledge Graph RAG Pipeline

1. Data Preparation & Preprocessing

This stage converts raw documents into a standardized format ready for automated analysis.

- Data Collection: Gather unstructured data sources (PDFs, text files, emails, web pages, etc.).

- Text Cleaning: Perform basic cleaning like removing HTML tags, headers, footers, and non-text elements.

- Chunking: For very large documents, split the text into smaller, manageable chunks or segments. This is crucial for models with limited context windows (especially LLMs).

- Tokenization & Segmentation: Break the text down into sentences and words (tokens), and potentially perform lemmatization or stemming to reduce words to their base form.

- Schema Definition: Define the ontology or schema (the allowed types of Entities, or Nodes, and Relationships, or Edges) before extraction. The schema constrains the extraction process so the model produces only the types you expect.
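A schema can be as simple as a set of allowed (subject type, relationship, object type) combinations that candidate triples are checked against. The types and relationships below are hypothetical; a real ontology would come from your own domain model.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    subject: str
    subject_type: str
    relation: str
    obj: str
    object_type: str


# Hypothetical schema: allowed (subject type, relationship, object type) combinations
SCHEMA = {
    ("Person", "WORKS_AT", "Organization"),
    ("Organization", "LOCATED_IN", "Location"),
    ("Service", "DEPENDS_ON", "Service"),
}


def filter_by_schema(candidates, schema=SCHEMA):
    """Keep only candidates whose type signature is allowed by the schema."""
    return [
        c for c in candidates
        if (c.subject_type, c.relation, c.object_type) in schema
    ]


candidates = [
    Candidate("Alice", "Person", "WORKS_AT", "Acme", "Organization"),
    Candidate("Acme", "Organization", "WORKS_AT", "Alice", "Person"),  # wrong direction
]
kept = filter_by_schema(candidates)
print(kept)
```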

2. Information Extraction (The NLP/LLM Core)

This is the most critical stage: Subject-Predicate-Object (SPO) triples are extracted from the text.

A. Entity and Relation Extraction

- Named Entity Recognition (NER): Identify and classify key entities (e.g., Person, Organization, Location, Concept). Traditional methods use rule-based systems or dedicated machine learning models (like those in spaCy or NLTK), but LLMs are increasingly used by prompting them to output entities in a structured format (like JSON) based on the defined schema.

- Relationship Extraction (RE): Identify the connections between the extracted entities. For instance, in the sentence “Elon Musk is the CEO of Tesla,” the relationship is (Elon Musk, is_CEO_of, Tesla). Again, specialized RE models or LLMs with a constrained output (e.g., using Pydantic schemas) are used to extract the predicate between two entities.
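When an LLM is asked to return triples as JSON, the output still needs to be validated before it reaches the graph. The sketch below uses only the standard library instead of Pydantic to stay dependency-free. The `llm_output` string is a hand-written stand-in for a model response, and the allowed predicates are assumptions.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str
    obj: str


ALLOWED_PREDICATES = {"is_CEO_of", "founded", "located_in"}  # hypothetical schema


def parse_triples(raw):
    """Parse a JSON array of triples, skipping malformed or off-schema items."""
    try:
        items = json.loads(raw)
    except json.JSONDecodeError:
        return []
    if not isinstance(items, list):
        return []

    triples = []
    for item in items:
        if not isinstance(item, dict):
            continue
        values = [item.get(key) for key in ("subject", "predicate", "object")]
        if not all(isinstance(v, str) for v in values):
            continue  # a field is missing or has the wrong type
        subject, predicate, obj = (v.strip() for v in values)
        if subject and obj and predicate in ALLOWED_PREDICATES:
            triples.append(Triple(subject, predicate, obj))
    return triples


# Stand-in for a model response to "Elon Musk is the CEO of Tesla."
llm_output = (
    '[{"subject": "Elon Musk", "predicate": "is_CEO_of", "object": "Tesla"},'
    ' {"subject": "Tesla", "predicate": "likes"}]'
)
triples = parse_triples(llm_output)
print(triples)
```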

B. Resolution and Normalization

- Coreference Resolution: Resolve pronouns and other references to the correct named entity. For example, linking “he” or “the company” back to the specific Person or Organization.

- Entity Linking/Disambiguation: Ensure that multiple textual mentions of the same real-world concept map to a single, unique Node. For example, merging “Apple” (the company), “Apple Inc.,” and “the tech giant” into one Organization node. This avoids redundant nodes in the final graph.
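In its simplest form, entity linking is a lookup from surface mentions to one canonical node name. The alias table below is hand-written for illustration. In practice a phrase like “the tech giant” can only be resolved from context, so real systems combine coreference models, embeddings, or an LLM with a lookup like this.

```python
# Hypothetical alias table: normalized mention -> canonical node name
ALIASES = {
    "apple": "Apple Inc.",
    "apple inc.": "Apple Inc.",
    "the tech giant": "Apple Inc.",
}


def canonical(mention, aliases=ALIASES):
    """Map a mention to its canonical entity, or return it unchanged if unknown."""
    key = " ".join(mention.lower().split())
    return aliases.get(key, mention.strip())


def normalize(triples):
    """Rewrite subjects and objects to canonical names and drop duplicate triples."""
    seen, result = set(), []
    for subject, predicate, obj in triples:
        triple = (canonical(subject), predicate, canonical(obj))
        if triple not in seen:
            seen.add(triple)
            result.append(triple)
    return result


raw = [
    ("Apple", "HEADQUARTERED_IN", "Cupertino"),
    ("the tech giant", "HEADQUARTERED_IN", "Cupertino"),
    ("Apple Inc.", "MAKES", "iPhone"),
]
merged = normalize(raw)
print(merged)
```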

3. Knowledge Graph Construction & Storage

The extracted and normalized triples are now built into the graph structure.

- Triple-to-Graph Conversion: Each extracted SPO triple becomes a part of the graph:

- Subject and Object → Nodes

- Predicate → Edge

- Storage: Load the graph data into a graph database (e.g., Neo4j, ArangoDB, Amazon Neptune). These databases are optimized for storing and traversing relationships. Libraries like LangChain and LlamaIndex offer graph integrations, and tools such as Neo4j's LLM Graph Builder can automate this conversion and loading process using LLMs.
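For Neo4j, one way to load a triple is a Cypher `MERGE` statement, which creates nodes and edges only if they do not already exist. The helper below is a sketch that only builds the query string and parameters; it does not connect to a database. The function name, the default `Entity` label, and the `name` property are assumptions. Labels and relationship types are interpolated into the query text here rather than passed as parameters, so they are validated first.

```python
import re

# Labels and relationship types go into the query text, so restrict them
# to plain identifiers before interpolating.
IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def triple_to_cypher(subject, predicate, obj,
                     subject_label="Entity", object_label="Entity"):
    """Return (query, params) that upserts two nodes and the edge between them."""
    for name in (predicate, subject_label, object_label):
        if not IDENTIFIER.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    query = (
        f"MERGE (s:{subject_label} {{name: $subject}}) "
        f"MERGE (o:{object_label} {{name: $object}}) "
        f"MERGE (s)-[:{predicate}]->(o)"
    )
    return query, {"subject": subject, "object": obj}


query, params = triple_to_cypher(
    "Elon Musk", "IS_CEO_OF", "Tesla", "Person", "Organization"
)
print(query)
print(params)
```

The resulting query and parameters would then be handed to a database driver session. Using `MERGE` rather than `CREATE` keeps repeated loads from duplicating nodes.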

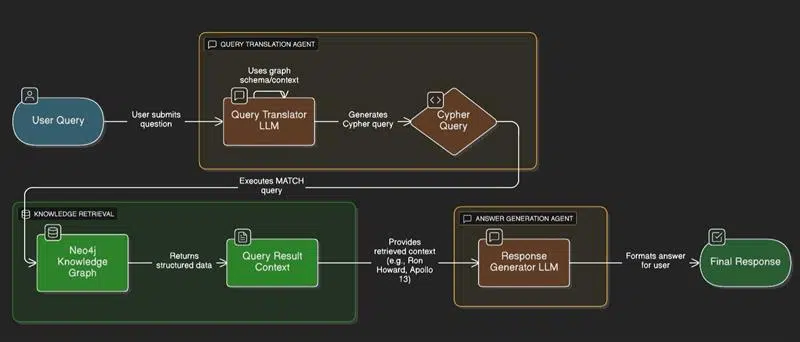

4. Querying, Reasoning & Application

The final stage is leveraging the organized knowledge.

- Querying: Use the graph database’s query language (e.g., Cypher for Neo4j) to find multi-step connections and patterns that are hard or impossible to find by searching raw text.

- Inference/Reasoning: Apply rules or Graph Neural Networks (GNNs) to discover new, implicit relationships. For example, if A → WORKS_AT → B, and B → LOCATED_IN → C, you can infer A → ASSOCIATED_WITH_LOCATION → C.

- Applications: Integrate the KG with generative AI systems. GraphRAG (graph-based Retrieval-Augmented Generation) uses the KG to retrieve specific, factual, multi-hop context to ground an LLM’s response, which can significantly improve accuracy over simple text-chunk RAG.
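The rule-based inference described above (A WORKS_AT B and B LOCATED_IN C implies A ASSOCIATED_WITH_LOCATION C) can be sketched as a simple join over triples. The people, companies, and city below are invented. This shows only the rule-based approach, not a GNN.

```python
def infer_locations(triples):
    """If A WORKS_AT B and B LOCATED_IN C, derive A ASSOCIATED_WITH_LOCATION C."""
    located_in = {}
    for subject, predicate, obj in triples:
        if predicate == "LOCATED_IN":
            located_in.setdefault(subject, []).append(obj)

    inferred = []
    for subject, predicate, obj in triples:
        if predicate == "WORKS_AT":
            for place in located_in.get(obj, []):
                inferred.append((subject, "ASSOCIATED_WITH_LOCATION", place))
    return inferred


# Hypothetical facts
facts = [
    ("Alice", "WORKS_AT", "Acme"),
    ("Acme", "LOCATED_IN", "Berlin"),
    ("Bob", "WORKS_AT", "Globex"),  # Globex has no known location
]
new_facts = infer_locations(facts)
print(new_facts)  # [('Alice', 'ASSOCIATED_WITH_LOCATION', 'Berlin')]
```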

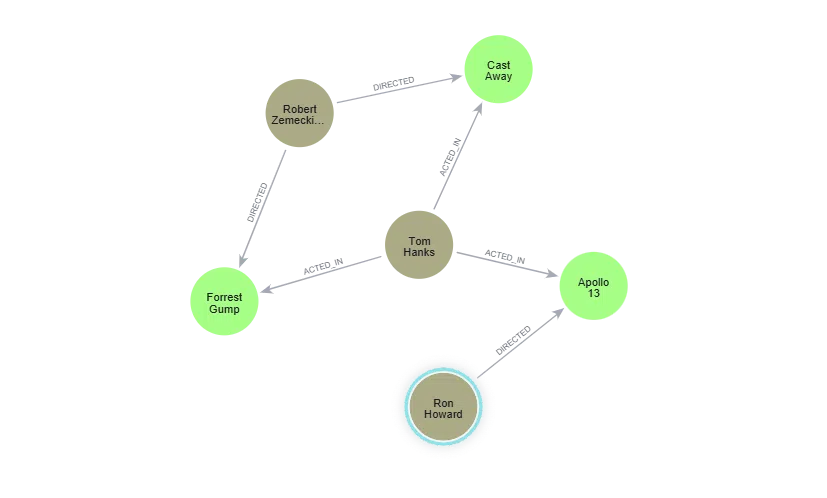

Scenario:

User Query:

“Who directed the movie Tom Hanks acted in where he played the character ‘Jim Lovell’?”

Final Response:

“The movie Tom Hanks acted in, where he played the role of Jim Lovell, was Apollo 13, and the director was Ron Howard.”
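The retrieval behind this scenario can be sketched as a three-hop traversal: actor to character, character to movie, movie to director. The relationship names (`PLAYED`, `CHARACTER_IN`, `DIRECTED`) and the in-memory triple list are assumptions made for this example. A real pipeline would run an equivalent graph query and pass the resulting path to the LLM to phrase the final response.

```python
# Illustrative triples; the relationship names are assumptions for this sketch
TRIPLES = [
    ("Tom Hanks", "PLAYED", "Jim Lovell"),
    ("Jim Lovell", "CHARACTER_IN", "Apollo 13"),
    ("Ron Howard", "DIRECTED", "Apollo 13"),
    ("Tom Hanks", "PLAYED", "Forrest Gump"),
    ("Forrest Gump", "CHARACTER_IN", "Forrest Gump (film)"),
    ("Robert Zemeckis", "DIRECTED", "Forrest Gump (film)"),
]


def objects(subject, predicate):
    """Follow an edge forward: all objects for a given subject and predicate."""
    return [o for s, p, o in TRIPLES if s == subject and p == predicate]


def subjects(predicate, obj):
    """Follow an edge backward: all subjects pointing at a given object."""
    return [s for s, p, o in TRIPLES if p == predicate and o == obj]


def director_of_role(actor, character):
    """Hop 1: actor -> character. Hop 2: character -> movie. Hop 3: movie -> director."""
    if character not in objects(actor, "PLAYED"):
        return []
    answers = []
    for movie in objects(character, "CHARACTER_IN"):
        for director in subjects("DIRECTED", movie):
            answers.append((movie, director))
    return answers


result = director_of_role("Tom Hanks", "Jim Lovell")
print(result)  # [('Apollo 13', 'Ron Howard')]
```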

Conclusion

Traditional RAG is great for surface-level Q&A. But enterprises need:

- Reliability

- Contextual intelligence

- Traceability

- Decision support

- Cross-functional reasoning

Knowledge Graph RAG unlocks a level of intelligence that traditional RAG simply cannot provide. It serves as the bridge between LLMs and enterprise data systems, enabling models to “understand” how your business operates.

Knowledge Graph RAG represents the next evolution of enterprise-grade AI by bringing structure, reasoning, and trust to generative systems. By grounding LLMs in trusted, interconnected knowledge, organizations can move beyond Q&A chatbots.

As a Principal Software Engineer at Anblicks, Vinit Prajapati specializes in leading enterprise application development, translating complex business needs into robust, growth-oriented technical solutions. His 15+ years in the IT industry are defined by extensive expertise in advanced web and cloud native applications, and intelligent application development.